Performing the Wizards of Oz – Written by Martin Porcheron

The Wizard of Oz experiment (WOz) is a research approach in which an intelligent system is presented to users, typically as part of a research study. Unbeknownst to the user, the presented intelligence is a mirage, with the gubbins of the supposedly intelligent system run by a human operator pulling metaphorical levers. In other words, the intelligence is a fiction. In an article presented at ACM CSCW 2020, and due to be published in Proceedings of the ACM on Human-Computer Interaction, we take a look at our use of the method and unpack the interactional work that goes into pulling off the method. That is, we pull back the curtain on the method. This blog post is a bit of a teaser, focusing solely on some of the elements of collaboration that we identified in the article.

Alternatively, instead of (or in addition to) reading this blog post, you can watch the presentation on YouTube (the conference was virtual in 2020, for obvious reasons). The presentation includes a short video clip from the data we collected if you want to get a feel for how the study unfolded.

As you can probably guess, the method’s name comes from the L. Frank Baum novel The Wonderful Wizard of Oz. Early uses of the method in HCI went by less exciting names like ‘experimenter in the loop’1. A WOz approach offers the ability to prototype and potentially validate (or not) design concepts through experimentation without the costly development time that a full system may require2. Approaches have included simulating things such as a ‘Listening Typewriter’3 and public service information lookup for a telephone line4. In WOz, different elements may be simulated, ranging from database lookup through to mobile geolocation tracking5. Due to the recent commercialisation of voice recognition technologies, there is a plethora of literature using the approach for studies in voice interface design, with natural language processing being the simulated component. I’d guess that’s because building natural language interfaces is a costly endeavour (in both money and time).

In our paper, we look at the use of a voice-controlled mobile robot for cleaning, where we simulated the natural language processing of the voice instruction and the conversion of this into an instruction to the robot (i.e. the Wizard listened to requests and controlled the robot). We were running RoboClean as part of a language elicitation study, although that’s not really the focus of the paper. Crucially, our study required two researchers to operate the proceedings: one scaffolded the participant interaction and the other performed the work of the ‘Wizard’, responding to participants’ requests and controlling the vacuum.

Collaboration was key

In the paper we go into much more detail, focusing on the various aspects needed to pull off such a study, starting with how the ‘fiction’ of the voice-controlled robot is established and presented to users, through to how the researchers attend to a technical breakdown while running the study. We progressively establish the fiction as an interactional accomplishment between all three interactants (i.e. the two researchers and the participant).

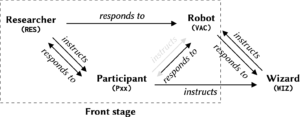

The researcher, who in our study stands with the participant, introduces the scenario, shows the robot to the participant, and guides them into instructing it (i.e. they scaffold the participant’s involvement in the study). The participant ostensibly talks to and responds to the vacuum. The Wizard—who is listening—responds to the request, in accordance with the fiction presented by the researcher and the notions of what a voice-controlled vacuum robot might reasonably respond to. It’s the Wizard whom the participant is really instructing in such a study (as the voice-controlled robot is but a fiction). The researcher standing with the participant then must performatively account for the actions taken by the Wizard according to that fiction. In other words, whatever ‘the robot does’, the researcher must attribute its actions to the robot to conceal the machinations of the Wizard.

There are other challenges, of course, that make this harder: the Wizard must respond to the participants’ requests quickly and in a way that is consistent with the fiction in order to ensure the methodological validity of the study. We also discuss a situation in the article where there is a technical glitch with the robots, requiring both researchers to work together in an improvised manner to uphold the secrecy of the Wizard, while trying to collaboratively resolve the issues faced.

Given the dramatic naming of the approach, we describe this accomplishment as a triad of fiction, taking place on the ‘front stage’ (with the Wizard working ‘backstage’). Around the same time, others also referred to this as ‘front channel’ and ‘back channel’ communication6. See the figure for how we pictorially represent the communication between the various interactants in our study.

Practical takeaways

Above I’ve focused on the collaboration required to pull off the study, but we also devote a fair chunk of the article to detailing the practical steps we took in implementing the study design and running the study. With this, we discuss how we used various technologies, piecing them together to present a believable ‘voice-controlled robot’. We had a shared protocol document that both the researcher and the Wizard used to maintain awareness of each other’s actions, and an outline script that detailed the sorts of requests that the robot would respond positively (or not) to; this script was progressively updated throughout the studies. While we frame running a WOz study as a performance, we were keen to stress the methodological obligations involved too: the performance must be undertaken according to methodologically valid research practice. We argue this requires meticulous care and attention, and that this is driven by the collaboration of the researchers throughout.
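To give a rough feel for what an outline script of this kind might look like if written down as data rather than prose, here is a minimal Python sketch. It is purely illustrative and not the tooling used in the study: the `OutlineScript` class, the keyword matching, and the example rules and action names (`start_cleaning`, `decline`, `no_response`) are all assumptions made up for this post. The idea is simply that the Wizard can look up whether a request falls within the fiction, and that rules can be added or changed between sessions.

```python
from dataclasses import dataclass, field


@dataclass
class OutlineScript:
    """Hypothetical outline script: maps a keyword to (accepted?, robot action)."""

    rules: dict = field(default_factory=dict)

    def add_rule(self, keyword: str, accepted: bool, action: str) -> None:
        # Adding a rule for an existing keyword overwrites it, which mirrors
        # the script being progressively updated between study sessions.
        self.rules[keyword.lower()] = (accepted, action)

    def lookup(self, request: str) -> tuple:
        # Return the first rule whose keyword appears in the spoken request.
        text = request.lower()
        for keyword, (accepted, action) in self.rules.items():
            if keyword in text:
                return accepted, action
        # Requests not covered by the script get no response from 'the robot'.
        return False, "no_response"


# Example rules (invented for illustration only).
script = OutlineScript()
script.add_rule("clean", True, "start_cleaning")
script.add_rule("make coffee", False, "decline")

print(script.lookup("Please clean under the table"))
print(script.lookup("Could you make coffee?"))
print(script.lookup("Tell me a joke"))
```

Even a sketch this small hints at why consistency is hard: a simple keyword lookup cannot capture the judgement the Wizard exercises in the moment, which is part of why the shared protocol and collaboration between the two researchers mattered so much.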